The LLM Disclosure Index: How Much We Really Share With AI

Tracking how much sensitive or personal information users disclose to AI assistants across business and everyday contexts.

When we open up to AI assistants, how much of our inner world are we actually revealing?

That’s the question behind the LLM Disclosure Index—a way of tracking how many users of large language models (LLMs) disclose sensitive or personal information in their interactions. Unlike usage statistics that measure how often people use AI, the disclosure index looks at what they’re willing to share.

Why Disclosure Matters

LLMs have become more than productivity tools—they’re increasingly confidants, consultants, and companions. That utility hinges on disclosure: the richer the input, the more useful the output. But disclosure also creates risk: sensitive personal data or confidential work information may be stored, analyzed, or exposed in ways users didn’t intend.

Understanding disclosure helps us balance those forces. It’s a proxy for trust, risk tolerance, and the perceived value of AI.

Two Lenses: Business vs. Everyday

To keep things simple, we track disclosure across two groups of LLM users:

- Business Users – people using AI in professional or workplace contexts

- Everyday Users – people using AI in their personal lives, from health queries to chatting with AI companions

The Numbers So Far

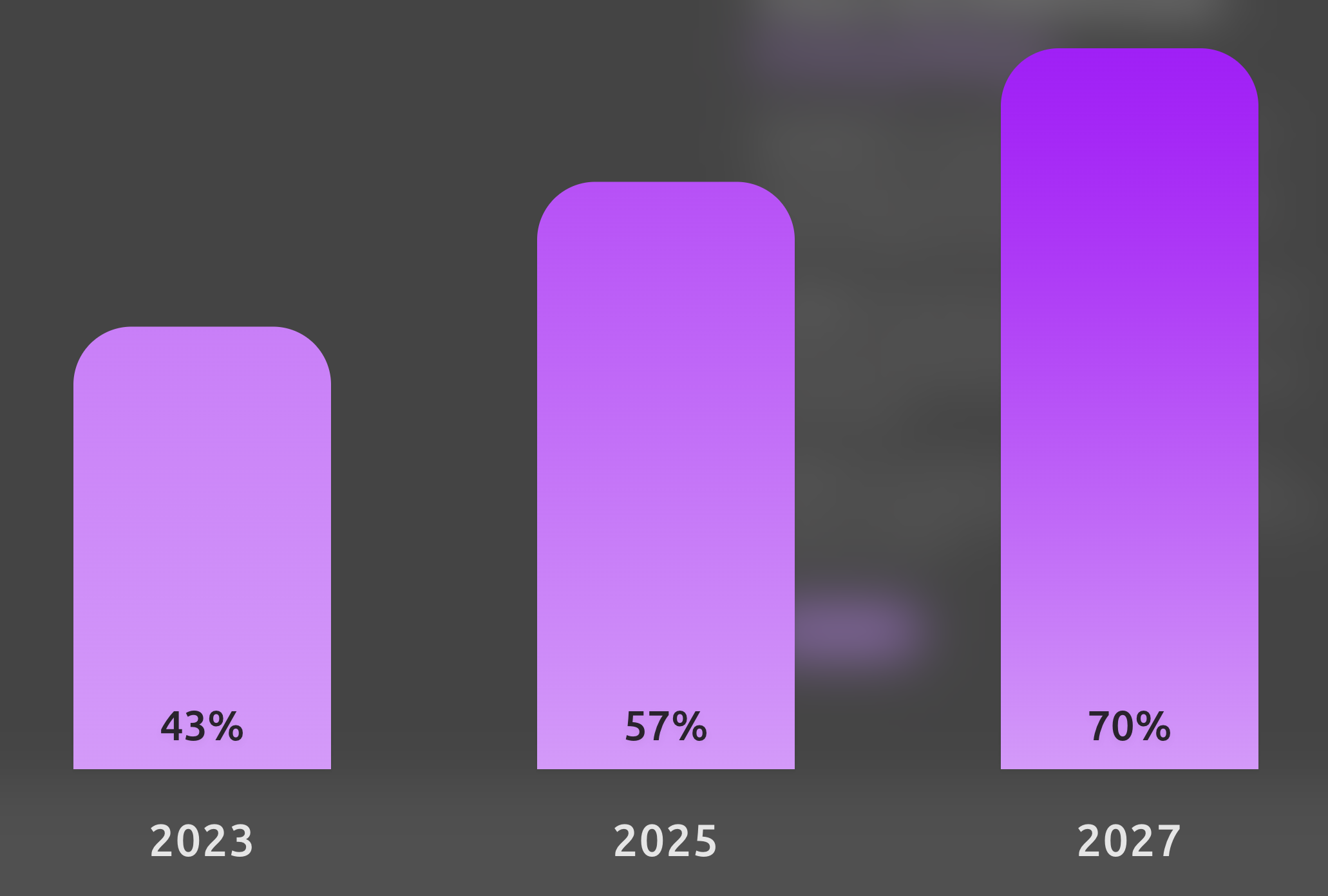

Business Users

- 2023: 43% of workplace users disclosed sensitive company data to LLMs (Cyberhaven).

- 2025: 57% admitted sharing confidential work information with GenAI tools (TELUS).

- 2027 (projection): Likely to reach about 70% or more and then level off, as AI is embedded in workflows and the perceived benefits outweigh the risks.

Everyday Users

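- 2023: Reliable large-scale data was not yet available, and adoption was still dominated by early adopters; the 28% shown in the summary table is best read as a rough estimate rather than a survey result.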

- 2025: About 41% of users report entering sensitive personal data—health, finances, or other private details (Cisco Consumer Privacy Survey; Tran et al., 2025).

- 2027 (projection): Disclosure is expected to rise to roughly 50-55% as AI becomes more capable and trusted in everyday life.

The Big Picture

| Year | Business Disclosure | Everyday Disclosure |
|---|---|---|
| 2023 | 43% | 28% |
| 2025 | 57% | 41% |
| 2027 | ~70% (proj.) | ~55% (proj.) |
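The arithmetic behind the table can be checked with a short Python sketch. It rests on two assumptions that the index itself does not state: that the figures from different surveys are comparable enough to subtract, and that a straight line through the 2023 and 2025 values is a reasonable baseline. The function names are hypothetical, and the straight-line extrapolation is only a naive comparison, not necessarily how the 2027 projections were produced.

```python
# Index values copied from the summary table (percent of users disclosing).
# The 2027 entries are the article's projections, not measurements.
INDEX = {
    2023: {"business": 43, "everyday": 28},
    2025: {"business": 57, "everyday": 41},
    2027: {"business": 70, "everyday": 55},
}


def point_change(group, start, end):
    """Change in disclosure between two years, in percentage points."""
    return INDEX[end][group] - INDEX[start][group]


def linear_extrapolation(group, start, end, target):
    """Extend the straight line through two observations to a target year."""
    slope = point_change(group, start, end) / (end - start)
    return INDEX[end][group] + slope * (target - end)


def gap_and_ratio(year):
    """Business-minus-everyday gap (points) and everyday/business ratio."""
    row = INDEX[year]
    return row["business"] - row["everyday"], row["everyday"] / row["business"]


if __name__ == "__main__":
    for group in ("business", "everyday"):
        naive = linear_extrapolation(group, 2023, 2025, 2027)
        print(f"{group}: +{point_change(group, 2023, 2025)} points 2023-2025, "
              f"straight-line 2027 value {naive:.0f}%")
    for year in INDEX:
        gap, ratio = gap_and_ratio(year)
        print(f"{year}: gap {gap} points, everyday/business ratio {ratio:.2f}")
```

On these numbers the straight-line values for 2027 come out at 71% for business users and 54% for everyday users, close to the table's projections. The gap between the two groups stays at 15 to 16 percentage points, while the everyday-to-business ratio rises from 0.65 to 0.79.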

What the Index Tells Us

- Business users lead disclosure. Employees consistently share more than everyday users, despite privacy risks and corporate warnings. Productivity pressures win.

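- Everyday users are catching up, at least in relative terms. The gap stays near 15 percentage points throughout the index, but everyday disclosure rises from about 65% of the business rate in 2023 to a projected 79% in 2027. As AI grows more persuasive and useful, personal disclosure is set to rise, especially around health, relationships, and finances.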

- Trust vs. caution. Disclosure is shaped by a tug-of-war: increasing utility and familiarity drive sharing up, while privacy awareness and guardrails pull it down.

Looking Ahead

The LLM Disclosure Index isn’t a perfect measure, but it highlights an important truth: AI assistants are becoming places where we reveal more than we might expect.

For businesses, this raises urgent questions about data protection and compliance. For everyday users, it’s about balancing convenience with privacy. For society, it’s about recognizing that disclosure is both a marker of trust—and a potential vector of harm.

As AI continues to evolve, so will what we share. The LLM Disclosure Index will help track just how far that trust—and risk—goes.